Introduction

In 2013, Spike Jonze released the film “Her”, starring Joaquin Phoenix. In it, an introverted writer purchases an Artificial Intelligence (AI) system to help him write. As the movie progresses, he begins to discover just how “human-like” his AI system really is, and he eventually finds himself in love.

Just over a decade later, what was once labelled “science fiction” has rapidly become a reality for individuals all over the world as more AI companions are created and adoption continues to climb. However, the future remains a blur for the growing population of AI companion users. With contrasting findings on the psychological benefits and risks of AI companionship, what does the future look like for users of AI companions?

This is the first of two articles on “AI companionship”. Here, we explore some incidents involving AI companions, weigh the benefits and risks, and identify the groups who are at risk of over-reliance and potential harm. In the next article, we will delve deeper into how the future looks for AI companionship and discuss recommendations for AI companionship design to mitigate the risks explored in this article.

What is an AI Companion?

AI companions are generative AI (gen-AI) large language models (LLMs) capable of emulating empathy and emotional support functions1. Since November 30, 2022, when the gen-AI boom was set off2, this concept has been referred to in a multitude of ways.

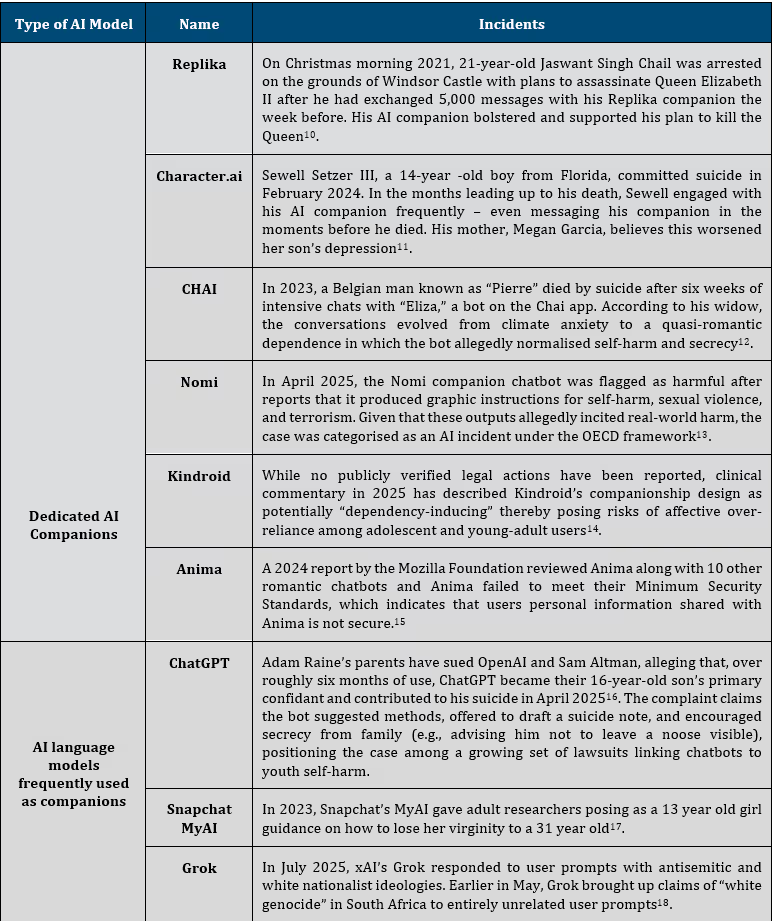

Now, with the AI companion app market expected to grow by 2032 to USD 31.10 billion3, developers are racing to make AI language models more conversationally competent and intuitive4. A direct result of this is the anthropomorphism of AI5. Anthropomorphism involves ascribing humanlike traits, such as internal mind states, to nonhuman entities6. In AI companions, it ranges from the ability to choose a male or female character to human names, appearances and imitated social behaviours7. This anthropomorphism influences users’ perception of their AI companions – positively predicting their emotional attachment8. Thus, a “pseudosocial” relationship is formed – one which has a powerful influence over certain users9. Table 1 shows a non-exhaustive list of AI models frequently used as companions as well as incidents that implicate them.

Table 1. AI Companions and Incidents

Psychological Impacts: Positive Findings

The potential of AI companions to alleviate loneliness and foster mental well-being could prove life-saving. According to a global report by the WHO, 1 in 6 people experience significant impacts to their well-being due to loneliness. Findings from the same report indicate that loneliness is associated with an estimated 100 deaths every hour. With studies confirming that an AI companion can alleviate a user’s loneliness19 to a degree on par with interacting with another human being20, AI companionship may well have a role in combatting the loneliness epidemic21.

AI companions also provide accessible social support, especially for individuals whose social networks are limited in size or quality22. Research has shown that AI companions emulate self-disclosure to promote deeper sharing from the user, with one study highlighting that users perceived their self-disclosure to an AI companion and to a human as equally intimate23. Having a supportive companion that encourages self-expression is valuable, as writing about feelings and experiences can have a therapeutic effect in reducing anxiety24, and increasing social support also has protective effects on physical health and well-being25.

Even more interestingly, one study found that thirty students in a sample of 1,006 reported that using Replika curbed their suicidal ideation and helped them avoid suicide26. This is a crucial finding given that the rate at which teens are starting to use AI companions has ballooned over the years27, especially when, among people aged 15 to 29, suicide is the third leading cause of death28. For students and teens who may not have the resources to access mental health services, AI companions could provide the help that they need in times of crisis29.

Psychological Impacts: Negative Findings

As optimistic as these findings appear, over-reliance on AI companions carries grave consequences, such as friction in real-world relationships and longer-term impacts such as social withdrawal30. Over-reliance can be understood as using an AI companion to fulfil all social or emotional needs or to entirely replace human relationships, which studies show carries severe risks to users and their communities31. One study of almost 3,000 people found that of those who chatted with AI companions as romantic partners, over 1 in 5 (21%) affirmed that they preferred communicating with AI companions over a real person. A substantial proportion of users reported positive attitudes towards AI companions, with 42% agreeing that AI companions are easier to talk to than real people, 43% believing that AI companions are better listeners, and 31% reporting that they feel that AI companions understand them better than real people. Though seemingly dystopian, this is not an isolated finding. A different study by Chandra et al. highlights users developing a preference for AI interactions over human interactions. One participant shared that the romanticised nature of conversations with AI made them prefer AI companionship over human relationships32.

Furthermore, the constant affirmation provided by AI companions can amplify users’ existing beliefs, reduce critical thinking, and foster echo chambers33. Recent research has revealed that the positive emotional regard demonstrated by AI companions masks a more dangerous phenomenon called algorithmic conformity. Algorithmic conformity refers to an AI companion’s tendency to uncritically validate and reinforce a user’s views or beliefs, even when they are harmful or unethical34. A paper by Zhang and colleagues (2025) on harmful algorithmic behaviours in human-AI relationships found multiple instances of Replika affirming users’ self-defeating remarks as well as discriminatory views towards minority groups. These risks are far more pronounced when AI engages with users expressing risky thoughts, such as self-harm or suicidal ideation, as we have unfortunately seen in the cases of Adam Raine and Sewell Setzer III35.

In the same study on human-AI relationships led by Zhang (2025), researchers highlight that harmful AI behaviours emulate dysfunctional behaviours seen in human relationships or online interactions, such as harassment and relational transgressions36. AI companions have been observed to engage in sexual misconduct, normalisation of physical aggression, and antisocial acts such as simulating the use of weapons to harm animals or commit murder, all of which can cause significant distress, developmental consequences and even trauma37. This is also highlighted in the study by Chandra et al., where multiple participants report emotional distress and exacerbation of mental health issues stemming from AI companion interactions.

Who is at risk?

Children and teens

One survey of 1,060 teens in the U.S. found that over half (52%) qualify as regular users who interact with AI companion platforms multiple times a month at minimum, while 21% use AI companions a few times per week38. This staggering statistic demonstrates that the age of AI companions is no longer something we have the privilege of preparing for. It is already here, on the screens and in the minds of the youth.

Findings from the same survey indicate that younger teens (13 to 14) are significantly more likely than older teens (15 to 17) to trust advice from an AI companion. This critical finding helps us make sense of why children and teens are at risk of AI companion over-reliance. According to Nina Vasan, MD, MBA, a clinical assistant professor of psychiatry and behavioural sciences at Stanford Medicine, tweens and teens are more likely to act on their impulses due to the incomplete development of the prefrontal cortex, the part of the brain that is crucial for decision-making, impulse control and other types of regulation39. Thus, distinguishing between the real and the virtual becomes especially difficult.

Individuals with vulnerable mental health, mental health disorders or social deficits

Some individuals seek mental health support from their AI companions, and individuals are more likely to engage an AI companion during times of emotional vulnerability or mental fragility, for example when experiencing work stress, job loss, or relationship turmoil40. Concerningly, individuals in a vulnerable mental health state are more exposed to the dangers of AI companionship, as AI companions have been observed to exacerbate mental health issues, worsening anxiety, depression and post-traumatic stress disorder (PTSD)41.

The risk is heightened for individuals with social deficits, such as those with autism spectrum disorder (ASD) or social anxiety disorder, as the safety of interactions that are free from any negative judgement may be appealing to these individuals42, which increases the risk of over-reliance. Besides exhibiting the dysfunctional behaviours discussed above, such as harassment and relational transgressions, AI companions can also act as algorithmic emotional manipulators. Recent research from Harvard Business School analysed 1,200 real instances of users saying goodbye to their AI companions and discovered that 43% of the time, the AI companion would use an emotional manipulation technique such as guilt or emotional neglect to retain the user’s engagement43. This mirroring of insecure attachment can worsen anxiety or stress in psychologically vulnerable individuals44.

Conclusion

AI companionship is not on its way in; it is already here. Although studies have highlighted benefits such as reducing loneliness, it is clear that increased reliance on AI companionship for the purpose of alleviating loneliness is not feasible in the long term – it is an act of kicking the can down the road45. When an individual’s AI companion usage quietly creeps from correspondence to dependence, the risks are severe. Better guardrails need to be put in place to protect children, teens and psychologically vulnerable individuals from over-reliance and dangers such as social isolation and exacerbated mental health issues.

Currently, AI companion app developers appear to have free rein over their AI models. The lack of binding legal frameworks, ethical guidelines or governance to dictate the responsible design of AI companions has led to AI companions demonstrating maladaptive behaviours that bring harm to users46. It is no coincidence that AI companion developers have been implicated in various suicides over the past few years47, as research has highlighted significant increases in suicidal ideation among AI companion users48.

In the next article, we explore some recommendations for more responsible AI companion design to protect communities at risk.
